LLaVA

Last update time: 2025-09-24 03:53:58

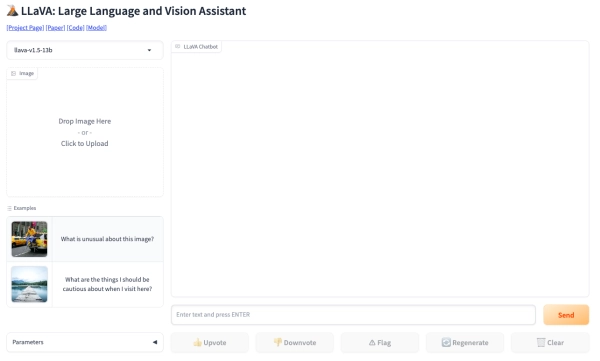

A tool that offers advanced language and vision understanding capabilities.

LLaVA (Large Language and Vision Assistant) is an open-source large multimodal model for general-purpose visual and language understanding. It connects a vision encoder (CLIP) to a large language model (LLM), Vicuna, and is trained end-to-end. LLaVA shows strong multimodal chat abilities and in some cases behaves similarly to multimodal GPT-4. When fine-tuned on Science QA and combined with GPT-4, it set a new state-of-the-art accuracy at the time of its release.

A key feature of the project is how its training data was produced: language-only GPT-4 was used to generate multimodal language-image instruction-following data. The data, models, and code are all publicly available. LLaVA has been fine-tuned for visual chat applications and for reasoning in the science domain, and performs well in both. Later releases have further improved its handling of more complex visual and textual inputs. Its ability to interpret graphs and diagrams from scientific papers makes it particularly useful for research and education.

Pricing: Free

Web Address: LLaVA

Tags: artificial intelligence, large language model, multimodal model, visual understanding, Vicuna, free AI tool

Similar AI tools

- Copyright Check AI
- Polygraf AI
- AI Voice Detector
- wasitai
- Automorphic
- LabelGPT
- Cerebrium
- Landing AI
- X-ray Interpreter
- Email Ferret
- FormX.ai
- Illuminarty
